TL;DR: We introduce a principled way to encode the geometric structure of tokens into vision transformers.

Abstract

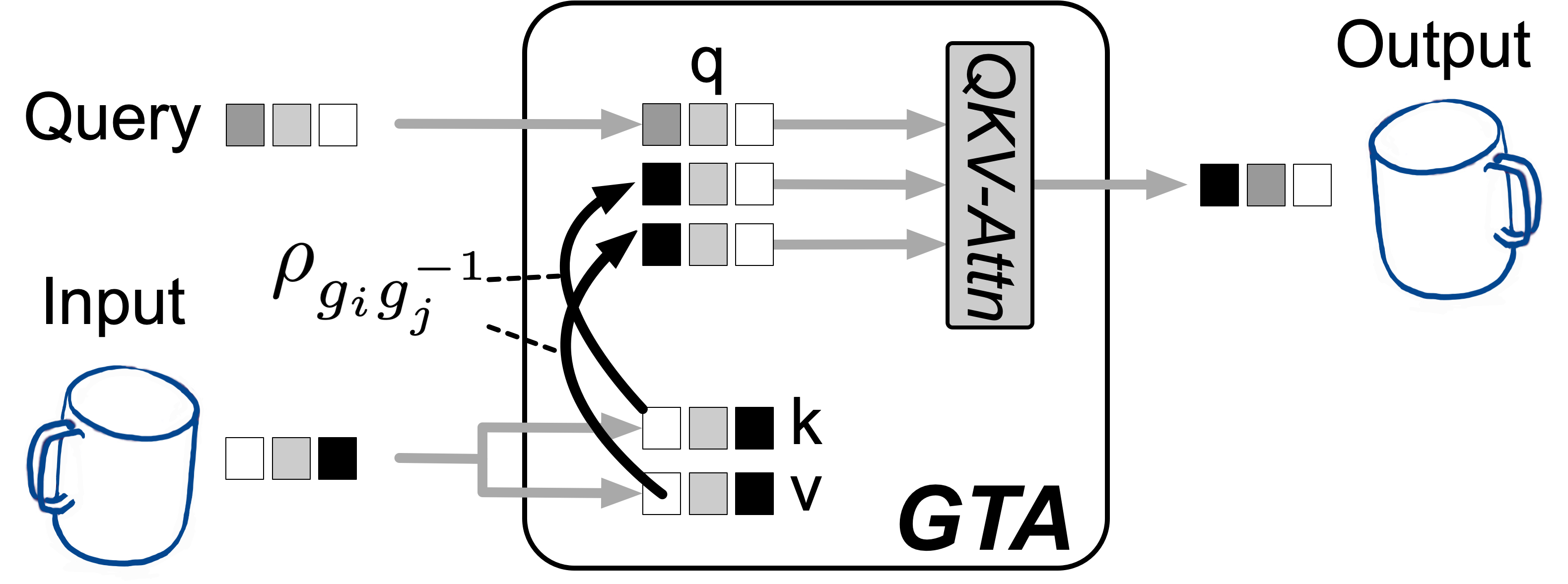

Because transformers are equivariant to permutations of their input tokens, many tasks require encoding the tokens' positional information. However, existing positional encoding schemes were originally designed for NLP tasks, so their suitability for vision tasks, whose data typically exhibit different structural properties, is questionable. We argue that existing positional encoding schemes are suboptimal for 3D vision tasks because they do not respect the underlying 3D geometric structure of those tasks. Based on this hypothesis, we propose a geometry-aware attention mechanism that encodes the geometric structure of tokens as a relative transformation determined by the geometric relationship between queries and key-value pairs. Evaluating on multiple novel view synthesis (NVS) datasets in the sparse wide-baseline multi-view setting, we show that our attention, called Geometric Transform Attention (GTA), improves the learning efficiency and performance of state-of-the-art transformer-based NVS models without any additional learned parameters and with only minor computational overhead.

Method

Our proposed method incorporates geometric transformations directly into the transformer's attention mechanism through a relative transformation of the QKV features. Specifically, each key-value token is transformed by a relative transformation determined by the geometric attributes of the query and key-value tokens. This can be viewed as a coordinate system alignment, which has an analogy in geometric processing in computer vision: when comparing two sets of points, each represented in a different camera coordinate space, we move one of the sets using a relative transformation so that all points are represented in the same coordinate space. Our attention performs this coordinate alignment within the attention feature space. The alignment allows the model not only to compare query and key vectors in the same reference coordinate space but also, because the value vectors are transformed as well, to add the attention output on the residual path in the aligned local coordinates of each token.
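The coordinate alignment described above can be illustrated with a minimal NumPy sketch. It is not the reference implementation, and several choices are assumptions made only for illustration: each token's geometric attribute is a 2x2 rotation matrix (a toy stand-in for a camera pose), the relative transformation from key-value token j to query token i is taken to be `poses[i] @ inv(poses[j])`, and that matrix acts on the features block-wise on consecutive channel pairs. The names `rotation`, `block_rep`, and `geometric_attention` are hypothetical.

```python
import numpy as np


def rotation(theta):
    """2x2 rotation matrix; a toy stand-in for a token's geometric attribute."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s], [s, c]])


def block_rep(g, d):
    """Block-diagonal d x d matrix that applies g to each consecutive channel pair."""
    return np.kron(np.eye(d // 2), g)


def geometric_attention(q, k, v, poses):
    """Single-head attention with keys and values aligned to each query's frame.

    q, k, v: arrays of shape (n_tokens, d) with d even.
    poses:   list of n_tokens 2x2 matrices (assumed geometric attributes).
    """
    n, d = q.shape
    out = np.zeros_like(v)
    for i in range(n):
        # Relative transformation from token j's frame into token i's frame
        # (assumed convention: poses[i] @ inv(poses[j])).
        rel = [block_rep(poses[i] @ np.linalg.inv(poses[j]), d) for j in range(n)]
        # Move every key and value into the query's coordinate space.
        k_aligned = np.stack([rel[j] @ k[j] for j in range(n)])
        v_aligned = np.stack([rel[j] @ v[j] for j in range(n)])
        # Standard scaled dot-product attention on the aligned features.
        logits = k_aligned @ q[i] / np.sqrt(d)
        weights = np.exp(logits - logits.max())
        weights /= weights.sum()
        out[i] = weights @ v_aligned
    return out
```

Because only relative transformations enter the computation, multiplying every pose on the right by the same matrix leaves the output unchanged in this sketch, and identity poses reduce it to ordinary softmax attention. The explicit per-pair loop is written for clarity rather than efficiency.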